From the Editor

by

Jeff Georgeson

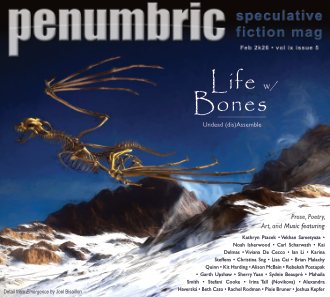

Bones

It’s your* ninety-ninth rejection. You’ve seen too many comments: “I’ve seen this before”; “Nothing new here.” You begin to believe “I have nothing new to offer the world.” Or conversely, you think, “These idiots! Everything I have to say is new! And wonderful.” I’ve had both these mindsets. Neither is ultimately helpful.

The latter is sometimes an ego survival instinct, and it isn’t helped by the fact that we live in a world that encourages us to forget what happened yesterday and pay attention only to the now. Is it any wonder that we recycle even recent ideas? Which is so weird: the half-life of cultural artifacts and ideas seems to be shrinking rather than expanding, the opposite of what you’d expect given that basically all of film and written history is at our fingertips. It’s as if having more knowledge available makes us want it less, or maybe overwhelms us until we pointedly ignore it all. Which sucks; I look at this expansion of knowledge as such an amazing opportunity, and yet what I’ll call the “social media world” wants to reinvent fire every five seconds (and wants us to be educated only on cat memes, but that’s another discussion). We’re encouraged to have an attention span no greater than a few minutes; we’re encouraged to think everyone much older than our attention span is a different generation (well, hyperbole, but more and more true), to be marketed at (rather than “to”) in a different and siloed way. That just makes it easier for us to dismiss any ideas older than today years old and think everything is squeaky new. And then we are crushed, or we put up our ego defenses, when we discover that it isn’t (if we come out of our cocoons long enough to discover this).

I understand both the desire to put one’s stamp on the world and the crushing depression brought on by thinking one’s ideas have all been done before. Just one of many examples is my Master’s dissertation.** When I first started it, I was sure I had an idea no one had thought of before: I wanted to create human-like personalities for NPCs. Yet as I looked through the papers written by others, I discovered I wasn’t the first to travel this road. I lamented this to my advisor, thinking I had to abandon hope and kind of wishing I’d never looked for these other works, and he said to me, no, this is good! It means you have a base to go from, an academic foundation to build on. You just have to work at developing your idea, using what you’ve learned as a springboard (or something like this; I’ve forgotten the exact discussion).

I think this is still good advice, and it’s good advice for things outside academia. Being aware of the stories that have been written before yours isn’t a death knell for your writing; instead, think of it as an opportunity to refine your ideas, to treat those other stories as what has been said before and then offer your own twist on it. Not every story has to be The Next Original Idea; maybe it presents an idea in a new way, or to an audience that might not have thought about it before.

I suppose Hollywood would see every remake as doing this, which is why they seem to market everything as “the [whatever] for a new generation” or some such malarkey. But I’m saying you should build on what came before, not just do it again (and not just pay lip service to “building on the idea” because you think it will market well to today’s audience).

It’s easier, of course, to pretend that nothing like your thoughts has ever happened before, and thus that any idea you have is the Coolest Ever. But doing the harder work of knowing what came before, and honing your thoughts using that, will let you say or do something that stands a chance of lasting more than the next twenty-four hours, when tomorrow’s new generation just says the same thing about your work that you said today and pats itself on the back.

And honestly, I think it is just better to know things: old things, last year’s things, last decade’s, last century’s. This is how we build civilization rather than tear it down, break cycles rather than relive them, grow as people and as a world.

Jeff Georgeson

Managing Editor

Penumbric

* I’ve written bits of this in second person, but obviously, if you don’t resemble the “you” in these remarks, please don’t take it personally.

** Yes, dissertation, not thesis; this was in the UK, and the terms are a little different than in the US.