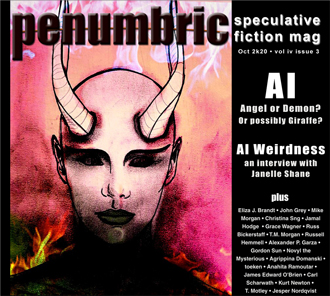

In this issue of Penumbric we immerse ourselves in artificial intelligence--both the classic and the ubiquitous. Classically, of course, we have what is more technically called "general artificial intelligence" or even "strong AI"--the Terminators, Mr. Datas, and Roy Battys of the world. And the ubiquitous? The world we live in, where "big data" is being fed to countless algorithms that try to figure out the best way to drive a car, build a shopping complex, or just choose between several colours of clothes to wear--or, on a more Terminator-esque note, influence our politics, get us to choose products we don't actually need, or recognize our faces as we walk down the street. But these last examples are not so much about the AI itself--which, after all, is just an algorithm and not a self-determining creature--as about what we, as humans, want to do with such things. And we humans aren't always the most enlightened of beings.

We talk about the various AIs, how they're used, and the ethics of creating anything even remotely like us in the first article in this issue, so I won't go into it generally here, but I will talk for a moment about my own experience in this field. I develop human-like personality systems for non-player characters in games. I began my foray into personality AI with an almost unfettered belief that it was overall a good thing, that it might be misused, as many scientific advances are, but that ultimately it would be used for good, that we would have tremendous opportunities to learn and to teach each other and that technology and social advances would follow at great speed. I now feel I vastly overestimated our own ability to get along with others; we don't want to even allow people in need to cross our human-created borders, let alone help them. In a time when we're as xenophobic as ever, and when it looks like the highest court in the US will roll back people's rights rather than add to them, it seems folly to bring into the world a creature that will be treated as a machine-slave, that will learn only that it is an Other, and that, if it mimics humans at all, will decide that we are Other, too, and dangerous to have around. I certainly don't agree with all the media-created fear that any and all General AI is going to be SkyNet, and I don't feel that my own tinkering in game systems is going to directly and in my lifetime lead to General AI, but I can certainly imagine that if I'm doing it, there are probably much better resourced groups making even better progress, and ... well, it seems weird feeling I might have to choose to fight against the creation of such AI rather than root for it. Somehow my optimism that we can create strong AI in an intelligent manner that benefits both us and them seems like wishing for world peace.

And how I, or even other AI developers, feel about it isn't the most important thing anyway. There are many stakeholders that should be consulted before General AI is built, including those whose jobs are affected/taken over by such creatures, from factory workers to sex workers--and not just because their livelihoods would be affected, but because they can talk about the conditions such artificial workers are likely to face, and what kinds of treatment--up to and including indentured servitude and abuse--they are likely to endure, and perhaps rebel against. It will take more than just the Victor Frankensteins and others in their ivory towers to successfully develop--or not develop--General AI. It will take the villagers as well.

Getting back to the AI we have today, we spoke with Janelle Shane, author of You Look Like a Thing and I Love You, about the kinds of AI weirdness we already see in the algorithms we create now. If you like giraffes, you'll love AI ... which isn't the non-sequitur it seems. We also talked about bias in AI algorithms, humans pretending to be AI, and the future of AI.

The main body of this issue is chock full of AI goodness (and badness, as it were). We start with Eliza J. Brandt's "Will You Miss Me When I'm Gone?," "Avatar Love" by Russell Hemmell, and Mike Morgan's "Something to Watch Over Us." "Assembly" by Russ Bickerstaff is more ambiguous, and "Cusp" by T.M. Morgan takes us in a very different direction. And there's an AI game show host in "Dr. Know-It-All" by Gordon Sun and an AI enjoying a drink in "Perfect Daze" by James Edward O'Brien.

As in all things, however, there is more than just a singular focus on AI. "The Dog Lover" by Agrippina Domanski is disturbingly good, and the strong poetry of Grace Wagner ("Nuclear War"), Jamal Hodge ("Of Other Worlds"), and Kurt Newton ("Deli Fishing"), all of whom appeared in the August issue, is joined by that of Alexander P. Garza ("Interstellar Affairs") and John Grey ("Tracking the Clozxil").

The artwork in this issue is an awesome collection of different and differing works, including Carl Scharwath's "She Is Watching You" and Anahita Ramoutar's "Entangled," toeken's "Stapmars" and Christina Sng's "Snowstorm," and Novyl the Mysterious's "Lycos of the Night" and cover art "Demonic Entity." And of course we continue the stories in the surreal "The Road to Golgonooza" by T. Motley and the science fantasy "Mondo Mecho" by Jesper Nordqvist.

Of course, if you're an AI you may instead see giraffes everywhere you look, in which case count 'em up and let us know how many you find--but then, you'll be telling the world you're an AI. But can you resist the programming that makes you want to tell everyone about the giraffes you've discovered?

I guess we'll find out.